Be aware that a few visual examples contain language and topics that some may find disturbing and offensive. The dark web provides an anonymous environment that some people choose to use for nefarious purposes. These tools help build cases to deter, catch, and convict those engaged in illegal activity.

The Challenge

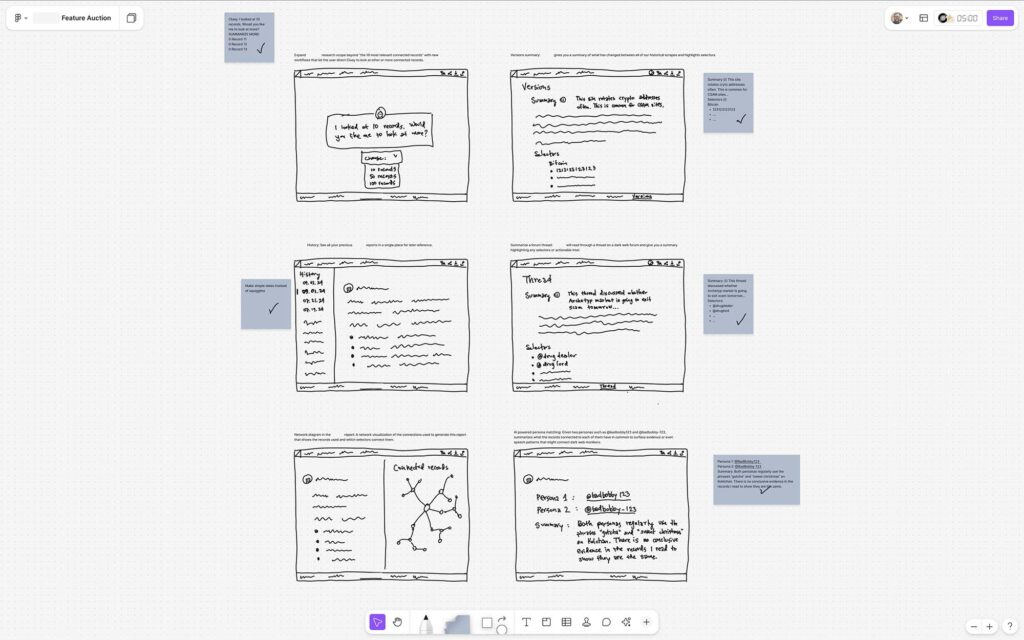

Our team was excited about using AI to assist with intelligence building and finding useful clues within our ever-growing collection of dark web records. But we knew we didn’t want to build just another chatbot. So we took some time to come up with ideas and get feedback from the team and customers.

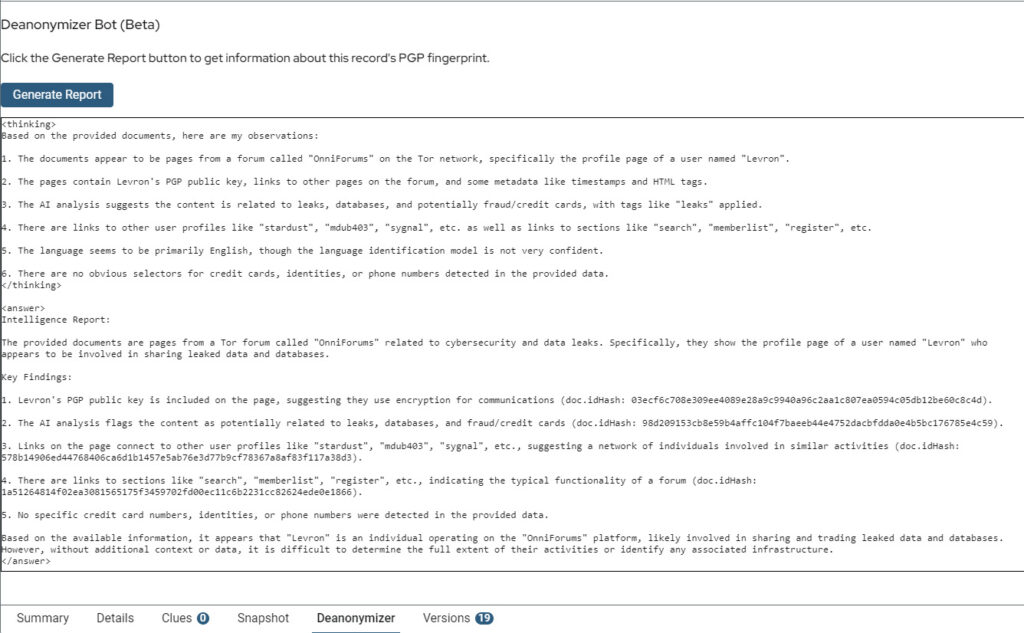

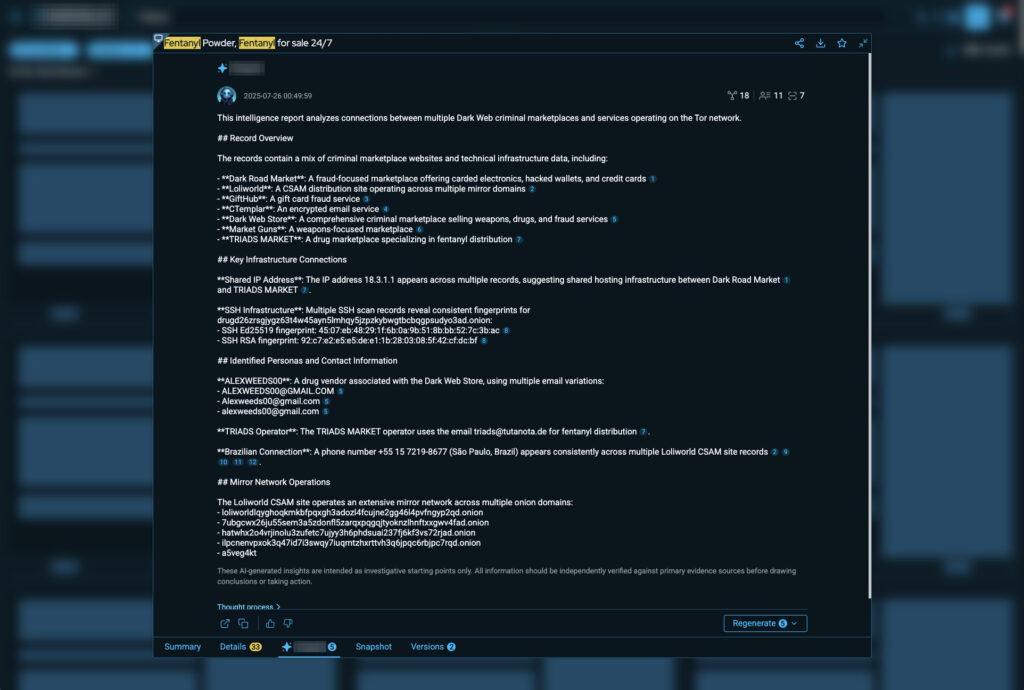

We narrowed it down to two ideas, and after some rough tests and prototypes we had our decision: expose useful clues in a summary report with one click from any record (card).

Important UX Design direction

- Keep it simple for the user

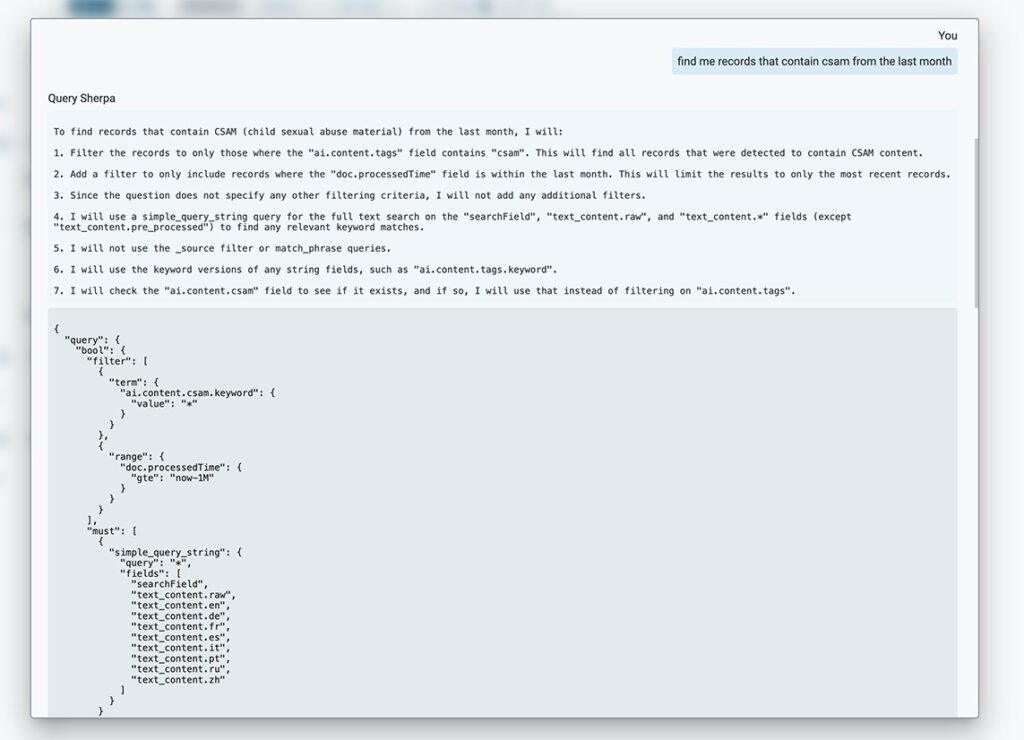

- No need for the user to write prompts

- No need for the user to learn any handrails

- We should handle as much of the complexity as possible

This required our team to fully understand the prompting instructions, learn which LLMs provide the best results and how to avoid “naughty blocking” (our term for when an LLM refused to analyze records due to the salty and often offensive language of dark web users), and run lots of tests. We didn’t worry about polishing the UI of the tests. We were in Discovery mode.

Discoveries

From our research, observations, and user feedback, we found…

- We needed to set precise boundaries (prompts, handrails, token usage).

- We needed a way to rate the LLM-generated reports and allow users to provide feedback.

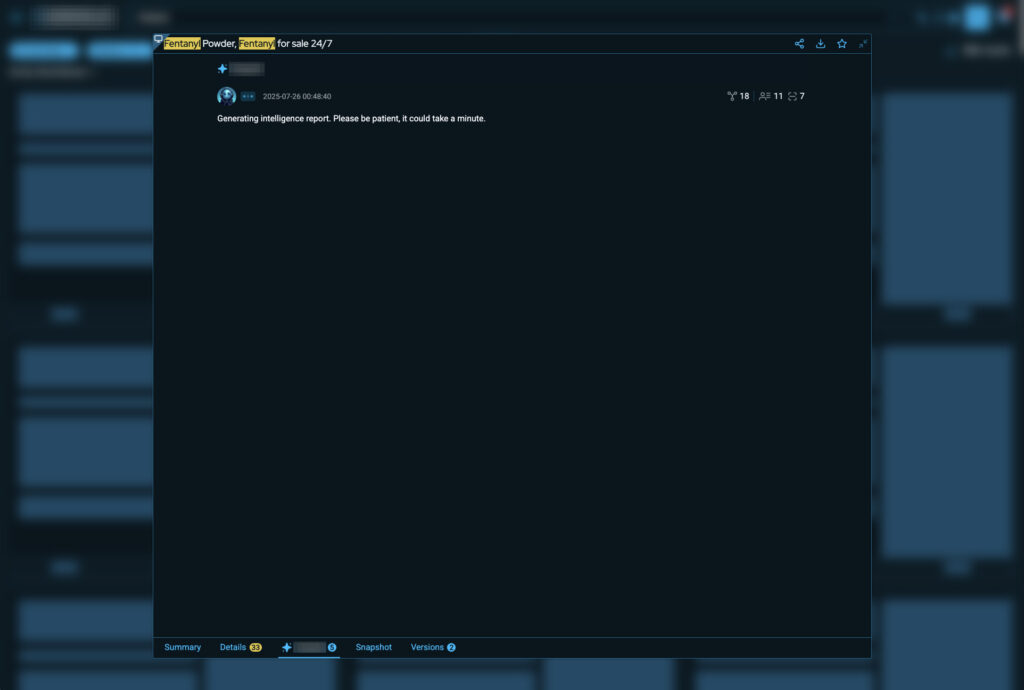

- There is a delay while the LLM ingests the data, and the response is then returned in chunks.

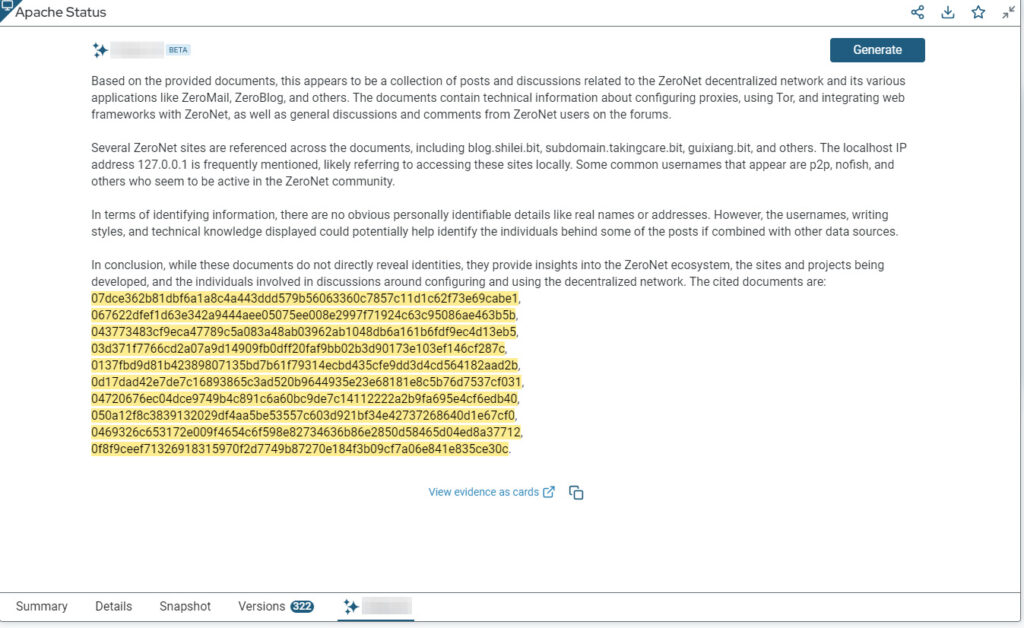

- We needed a way to convey data credibility in the reports to the user.

After a number of iterations of testing, we decided…

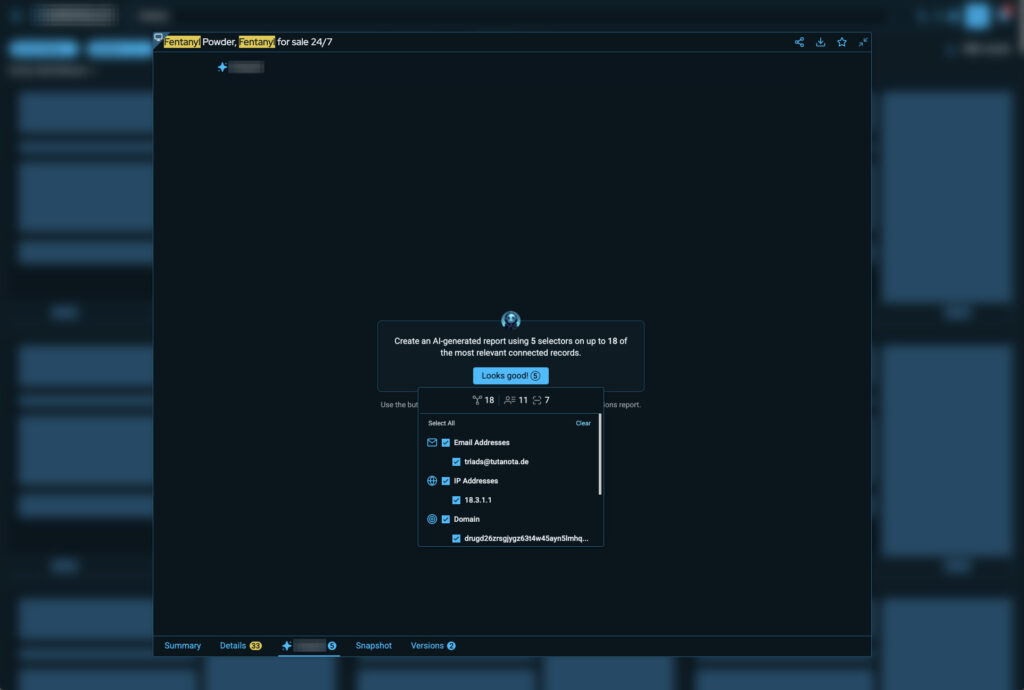

- To limit the number of tokens needed, we decided to include only the ten most related records in the prompt.

- We added thumbs-up and thumbs-down icons at the bottom of every report as an easy way to rate it, plus an optional form field that sends detailed feedback directly to a specific Slack channel that the team monitored daily.

- To inform the user that report generation had started, we added a small, animated triplet of dots. As the response data started returning from the LLM, we chose to render it smoothly in real time, as if it were being written.

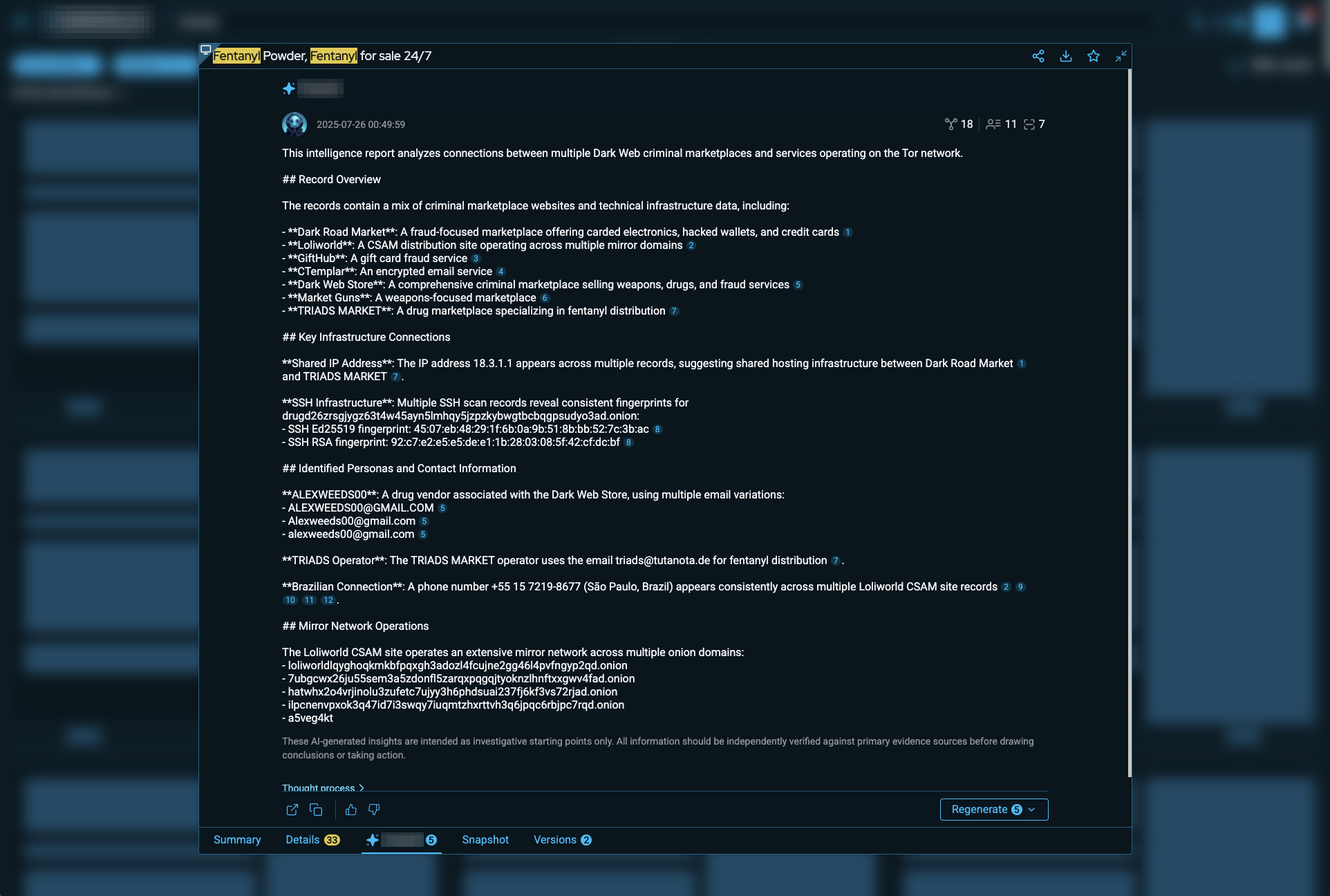

- We added source evidence reference links (like Perplexity) within the report.
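As a rough illustration of two of the decisions above (capping the prompt at the ten most related records, and linking report claims back to their sources), here is a minimal Python sketch. It is not our production code: the `Record` fields, the `relatedness` score, the prompt wording, and the `/records/...` link path are all assumptions made up for the example.

```python
import re
from dataclasses import dataclass

MAX_RELATED_RECORDS = 10  # the cap described in the list above


@dataclass
class Record:
    record_id: str      # hypothetical identifier
    text: str
    relatedness: float  # hypothetical score; higher means more related


def select_related(records, limit=MAX_RELATED_RECORDS):
    """Keep only the `limit` most related records, most related first."""
    return sorted(records, key=lambda r: r.relatedness, reverse=True)[:limit]


def build_prompt(card, related):
    """Number each source so the report can cite it as [n]."""
    sources = "\n".join(f"[{i}] {r.text}" for i, r in enumerate(related, start=1))
    return (
        "Summarize the useful clues in the record below. "
        "Cite supporting sources as [n].\n\n"
        f"Record:\n{card.text}\n\nRelated records:\n{sources}"
    )


def link_citations(report, related):
    """Turn [n] markers into markdown links; leave unknown numbers untouched."""
    def replace(match):
        n = int(match.group(1))
        if 1 <= n <= len(related):
            # The link path is a placeholder, not a real route.
            return f"[[{n}]](/records/{related[n - 1].record_id})"
        return match.group(0)

    return re.sub(r"\[(\d+)\]", replace, report)


# Usage: twelve candidate records, only ten make it into the prompt.
records = [Record(f"r{i}", f"post {i}", i / 12) for i in range(12)]
related = select_related(records)
print(len(related))  # 10
print(link_citations("Handle reused [1], see also [42].", related))
# Handle reused [[1]](/records/r11), see also [42].
```

Leaving unmatched markers such as `[42]` untouched is a deliberate choice in this sketch: a citation the model invented stays visibly unlinked instead of pointing at the wrong record.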

Lessons learned

- AI is very useful but requires lots of testing and moderation

We learned quickly that using AI to produce useful reports requires setting very precise boundaries, teaching users to scrutinize the data (don’t just accept it as hard fact), and providing an easy way to gather user feedback.

- Understand your constraints

Designing this feature introduced unique challenges: token limits, time delays for LLM processing, AI hallucinations, detailed text prompts, the need for immediate user feedback, and a limit on the number of related records.

- Stay curious and open-minded

Technology is always evolving. Cultivating a growth mindset is critical for a UX practitioner, especially in this new era of AI. Pay attention to how others are tackling problems and use it as inspiration to serve and delight your users.

Customer quotes

“I really liked the [feature] summary. It shows the important information upfront and allows for a quicker workflow, which is always a positive when working these cases.”

“I’ve been having a blast with [this feature]. It’s been really interesting to be able to understand the content. When it does provide feedback about CSAM material, it’s been very helpful, and it’s been helping my mental health. [It] says, ‘Here’s what’s going on. You don’t have to read the horrible, gory things.’”